Latest revision as of 05:53, 5 May 2016

Musical Machine Learning

Overview

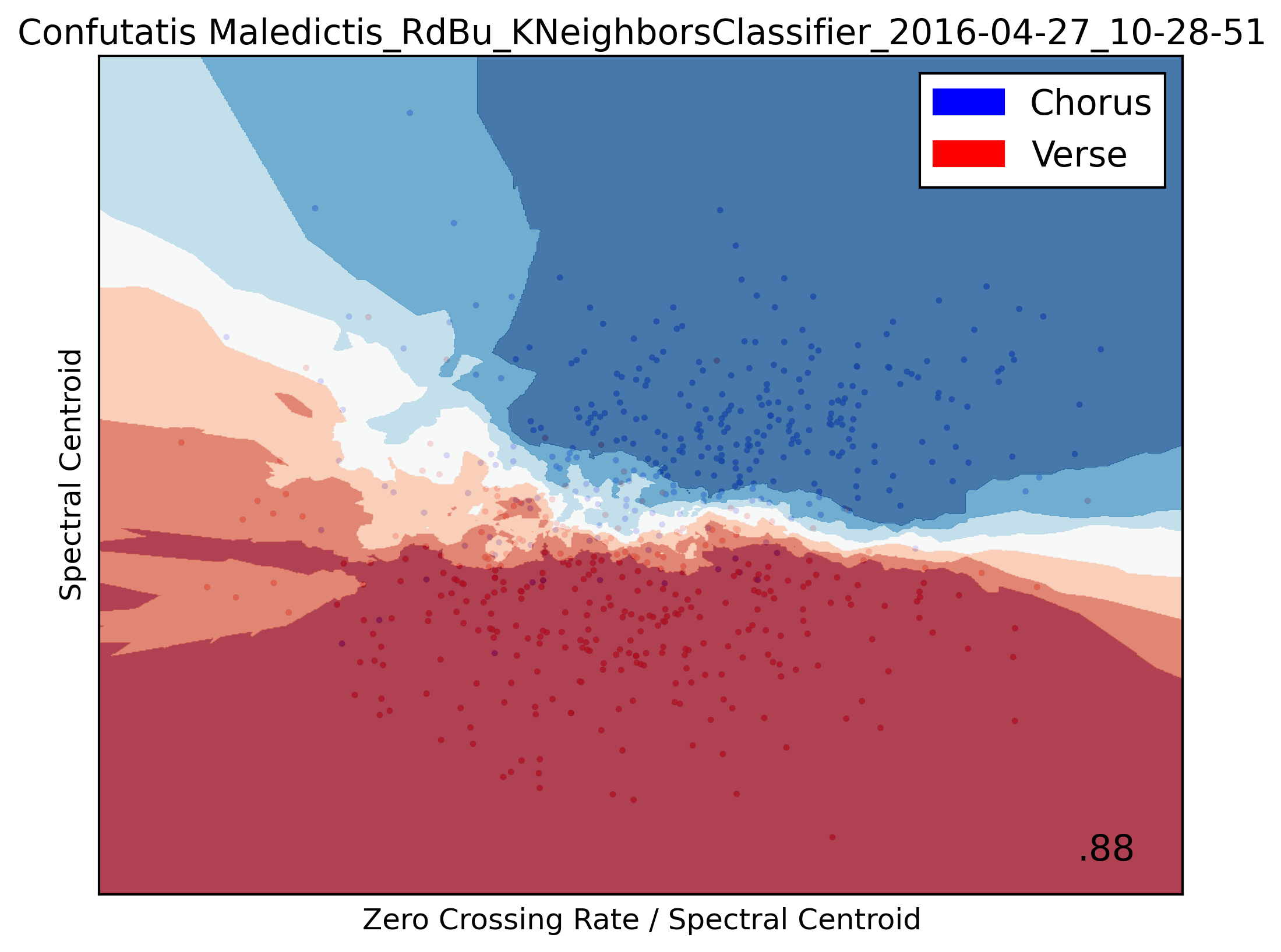

This project created a foundation for future work by WPI students in music information retrieval and machine learning. A Python system was first constructed to extract variable-length features from audio files. The problem of determining song structure was then approached with both supervised and unsupervised learning algorithms, resulting in a novel method for automated structure analysis. Finally, the groundwork was laid for the use of deep neural networks for musical composition.
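The feature-extraction step can be illustrated with a minimal sketch. It assumes mono audio has already been decoded into a NumPy array. It computes the two features named in the TODO list, zero-crossing rate and spectral centroid, over fixed-size frames, then averages them into one vector per segment. The function names, the 1-second segment length, and the 2048-sample frame size are illustrative assumptions, not the project's actual code.

```python
import numpy as np


def frame_features(frame: np.ndarray, sample_rate: int) -> tuple[float, float]:
    """Return (zero_crossing_rate, spectral_centroid_hz) for one mono frame."""
    # Zero-crossing rate: fraction of adjacent sample pairs whose signs differ.
    signs = np.signbit(frame)
    zcr = float(np.mean(signs[1:] != signs[:-1]))
    # Spectral centroid: magnitude-weighted mean frequency of the frame's spectrum.
    magnitudes = np.abs(np.fft.rfft(frame))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    total = magnitudes.sum()
    centroid = float((freqs * magnitudes).sum() / total) if total > 0 else 0.0
    return zcr, centroid


def segment_features(samples: np.ndarray, sample_rate: int,
                     segment_seconds: float = 1.0,
                     frame_size: int = 2048) -> np.ndarray:
    """Average frame-level features over consecutive fixed-length segments.

    Returns an array of shape (num_segments, 2); a trailing partial
    segment is dropped. Hypothetical helper for illustration only.
    """
    seg_len = int(segment_seconds * sample_rate)
    if frame_size > seg_len:
        raise ValueError("frame_size must not exceed the segment length")
    features = []
    for start in range(0, len(samples) - seg_len + 1, seg_len):
        segment = samples[start:start + seg_len]
        # Non-overlapping frames inside the segment.
        frames = [segment[i:i + frame_size]
                  for i in range(0, seg_len - frame_size + 1, frame_size)]
        per_frame = np.array([frame_features(f, sample_rate) for f in frames])
        features.append(per_frame.mean(axis=0))
    return np.array(features)
```

Averaging frame features per segment turns a song of any length into a sequence of fixed-size vectors that a clusterer or classifier can consume. How the features are generated and averaged is exactly the kind of choice the TODO list suggests experimenting with.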

TODO List

- Large additions
  - Cluster an entire song in 1-second segments, then use a Gaussian KDE to smooth out the classification. This can then be used to mark the actual "segments" of a song.
  - Train a large deep neural net to try to automatically distinguish between parts of a song
  - Composition
    - Create an LSTM recurrent neural net that learns from MIDI input
    - Combine it with the Verse/Chorus algorithm to give generated songs more structure
    - Create a website where people can upload a MIDI file and then listen to the RNN improvise over it
- Small improvements to the Verse/Chorus system:
  - Use an SVM with a linear kernel (instead of RBF) as a better approximation of the "true" clustering
  - Find additional features beyond spectral centroid and zero-crossing rate
  - IPython notebook demo (https://ipython.org/notebook.html)
    - Experiment with how the features are generated and averaged
  - Include outlier detection in the data preprocessing stage
    - (http://scikit-learn.org/stable/modules/outlier_detection.html)
  - Optimize the different classifiers
  - Optimize song loading times (store in a database? use an alternative format?)
  - Add an option for multiple sections (e.g., a bridge)
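The first large addition, smoothing per-second classifications with a Gaussian KDE, might look like the following minimal sketch. It assumes every 1-second segment has already been given a cluster label. For each label it sums Gaussian kernels centred on the segments carrying that label, then relabels each segment with the label of highest density. Because the per-label densities are left unnormalised, labels that occur more often get proportionally more weight, so this behaves like a kernel-weighted majority vote. The function names and the bandwidth (measured in segments) are hypothetical choices.

```python
import numpy as np


def kde_smooth_labels(labels, bandwidth: float = 2.0) -> np.ndarray:
    """Smooth a per-segment label sequence with one Gaussian KDE per label.

    Hypothetical helper: returns the label with the highest (unnormalised)
    kernel density at each segment position.
    """
    labels = np.asarray(labels)
    positions = np.arange(len(labels))
    classes = np.unique(labels)
    density = np.zeros((len(classes), len(labels)))
    for k, c in enumerate(classes):
        centers = positions[labels == c]
        # Distance from every evaluation position to every kernel centre.
        diffs = positions[:, None] - centers[None, :]
        density[k] = np.exp(-0.5 * (diffs / bandwidth) ** 2).sum(axis=1)
    return classes[np.argmax(density, axis=0)]


def segment_boundaries(labels) -> list[int]:
    """Indices where the label changes, i.e. candidate section boundaries."""
    return [i for i in range(1, len(labels)) if labels[i] != labels[i - 1]]
```

For example, a raw labelling such as `[0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1]` smooths to eight 0s followed by eight 1s with a bandwidth of 2, leaving a single boundary at segment 8. Larger bandwidths smooth away longer runs, so the bandwidth roughly controls how short a section can be before it disappears.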
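For the outlier-detection item, one simple option is a per-feature z-score filter applied to the segment feature vectors before clustering. This is a swapped-in technique chosen for brevity, not one of the estimators from scikit-learn's outlier-detection module; the function name and the threshold of 3 are assumptions.

```python
import numpy as np


def remove_outliers(features, z_threshold: float = 3.0):
    """Drop rows whose absolute z-score exceeds z_threshold on any column.

    Hypothetical helper: returns (filtered_features, keep_mask).
    """
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    std = features.std(axis=0)  # population standard deviation per column
    std[std == 0] = 1.0  # avoid division by zero for constant columns
    z = np.abs((features - mean) / std)
    keep = (z <= z_threshold).all(axis=1)
    return features[keep], keep
```

With the population standard deviation, a single outlier among n rows can reach a z-score of at most sqrt(n - 1), so a threshold of 3 can only remove anything when more than ten segments are available. For small or multi-cluster data (for example, separate verse and chorus clusters), scikit-learn estimators such as `IsolationForest` or `LocalOutlierFactor` are likely better suited.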

Help Connecting to Repository

All files for this project are stored in the secured repository below. Once you have created an account on the Git server (see Help), contact Manzo for access.

Main project repository address:

http://solar-10.wpi.edu/ModalObjectLibrary/MachineLearning (Git SSH address: git@solar-10.wpi.edu:ModalObjectLibrary/MachineLearning.git)

WPI Student Contributors

2016

Nicholas S. Bradford