
=== Sight to Sound ===

This is a sight-to-sound application: software that takes a camera input and outputs a spectrum of audio frequencies. The creative task is to choose a mapping from 2D pixel-space to 1D frequency-space that could be meaningful to the listener. It would of course take someone a long time to relearn their sight through sound, but the purpose of this project is only to ''implement'' the software.

The mapping from pixels to frequencies used here is the Hilbert Curve. This mapping is desirable for two reasons. First, as the camera dimensions increase (and with them the order of the curve), each position along the curve settles toward a specific point in the image. Increasing the dimensions therefore refines the approximation of the camera data instead of reshuffling it, which amounts to "higher-resolution sound" in terms of audio-sight. Second, the Hilbert Curve preserves locality: pixels that are near each other in pixel-space are, for the most part, assigned frequencies near each other in frequency-space. Because it leverages these two intuitions about sight, the Hilbert Curve is an excellent choice of mapping for this hypothetical software.
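Both properties can be checked with a short sketch. The code below is the standard iterative conversion from a position ''d'' along the curve to a grid cell (x, y), as given in Wikipedia's Hilbert curve article; it is not necessarily what the project's own javascript files do. The helper names ''rot'', ''d2xy'', ''consecutiveCellsAreAdjacent'' and ''pointAtFraction'' are hypothetical, the grid side ''n'' is assumed to be a power of two, and the sketch runs as plain JavaScript under Node.js.

```javascript
// Standard iterative Hilbert-curve conversion; n (grid side) must be a power of two.

// Rotate/flip a quadrant so that neighboring sub-curves join end to end.
function rot(n, x, y, rx, ry) {
  if (ry === 0) {
    if (rx === 1) {
      x = n - 1 - x;
      y = n - 1 - y;
    }
    return [y, x]; // swap x and y
  }
  return [x, y];
}

// Position d along the curve (0 .. n*n - 1) -> cell [x, y].
function d2xy(n, d) {
  var x = 0, y = 0, t = d;
  for (var s = 1; s < n; s *= 2) {
    var rx = 1 & Math.floor(t / 2);
    var ry = 1 & (t ^ rx);
    var p = rot(s, x, y, rx, ry);
    x = p[0] + s * rx;
    y = p[1] + s * ry;
    t = Math.floor(t / 4);
  }
  return [x, y];
}

// Locality: consecutive positions on the curve are always neighboring cells.
function consecutiveCellsAreAdjacent(n) {
  for (var d = 0; d + 1 < n * n; d++) {
    var a = d2xy(n, d);
    var b = d2xy(n, d + 1);
    if (Math.abs(a[0] - b[0]) + Math.abs(a[1] - b[1]) !== 1) return false;
  }
  return true;
}

// Convergence: the cell found a fixed fraction t of the way along the curve,
// with coordinates normalized to 0..1, settles toward one point as n grows.
function pointAtFraction(n, t) {
  var c = d2xy(n, Math.floor(t * n * n));
  return [(c[0] + 0.5) / n, (c[1] + 0.5) / n];
}

for (var k = 1; k <= 5; k++) {
  var n = Math.pow(2, k);
  console.log(n, pointAtFraction(n, 0.5), consecutiveCellsAreAdjacent(n));
}
```

For t = 0.5 the printed coordinates are 0.75, 0.625, 0.5625, 0.53125 and 0.515625, closing in on the center (0.5, 0.5), and every order reports that consecutive positions are neighboring cells. The converse only holds for the most part: two pixels on either side of a quadrant boundary can sit far apart along the curve.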

The video below is a demonstration of the patch in Max. For a better understanding of the concept, see this video by YouTube animator ''3Blue1Brown'': [https://www.youtube.com/watch?v=3s7h2MHQtxc '''link''']

<htmltag tagname="iframe" id="ensembleEmbeddedContent_aELPpo0eJEiNwcKrpzACnQ" src="https://video.wpi.edu/hapi/v1/contents/a6cf4268-1e8d-4824-8dc1-c2aba730029d/plugin?embedAsThumbnail=false&displayTitle=false&startTime=0&autoPlay=false&hideControls=true&showCaptions=false&width=276&height=216&displaySharing=false&displayAnnotations=false&displayAttachments=false&displayLinks=false&displayEmbedCode=false&displayDownloadIcon=false&displayMetaData=false&displayCredits=false&displayCaptionSearch=false&audioPreviewImage=false&displayViewersReport=false" title="Ben Gobler Final Project" frameborder="0" height="216" width="276" allowfullscreen></htmltag>

== Walkthrough of the code ==

| − | The | + | {|style="margin: 0 auto;" |

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

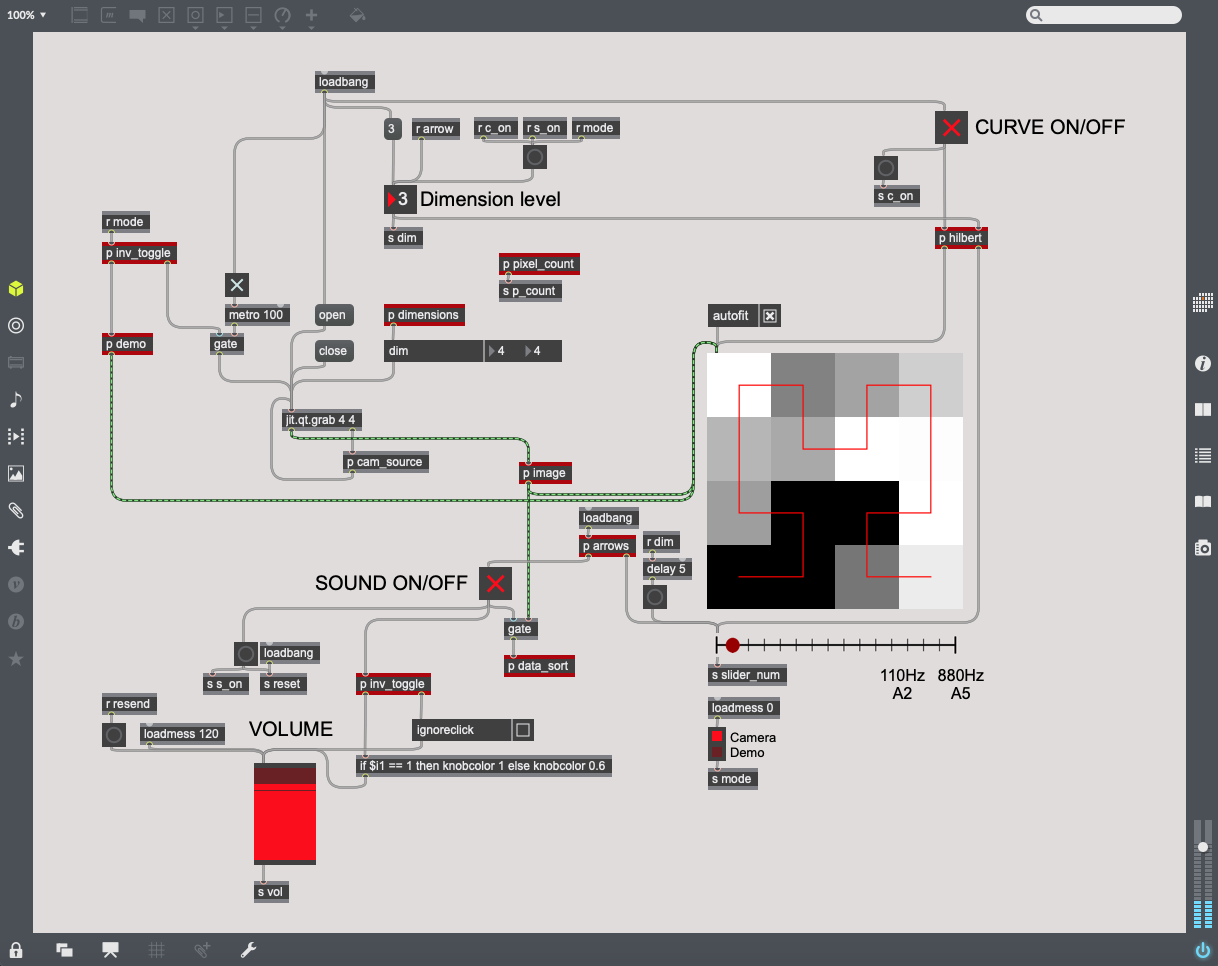

| + | | [[File:Main.png|350px|thumb|This is the main patcher. It looks complicated, but there are only two primary paths running.]] | ||

| + | |} | ||

| + | {|style="margin: 0 auto;" | ||

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

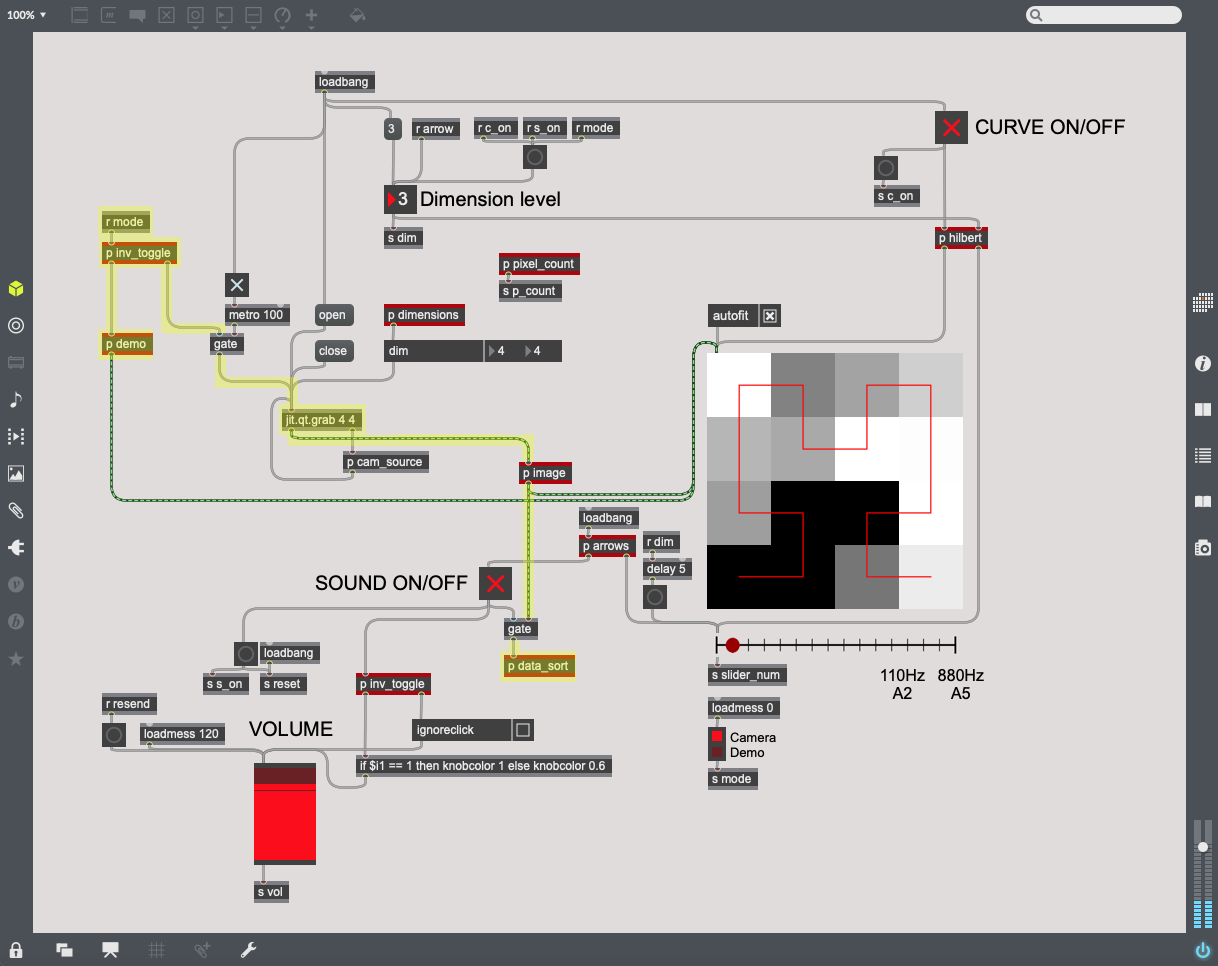

| + | | [[File:Mode.png|350px|thumb|This path controls the current mode. The left branch runs the demo mode, and the right branch runs the camera mode using camera data from ''jit.qt.grab''. The mode is directed by the subpatcher ''p inv_toggle'', which acts as a router between 0 and 1, but with toggles. This toggle is controlled by the radiogroup with the two mode options.]] | ||

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

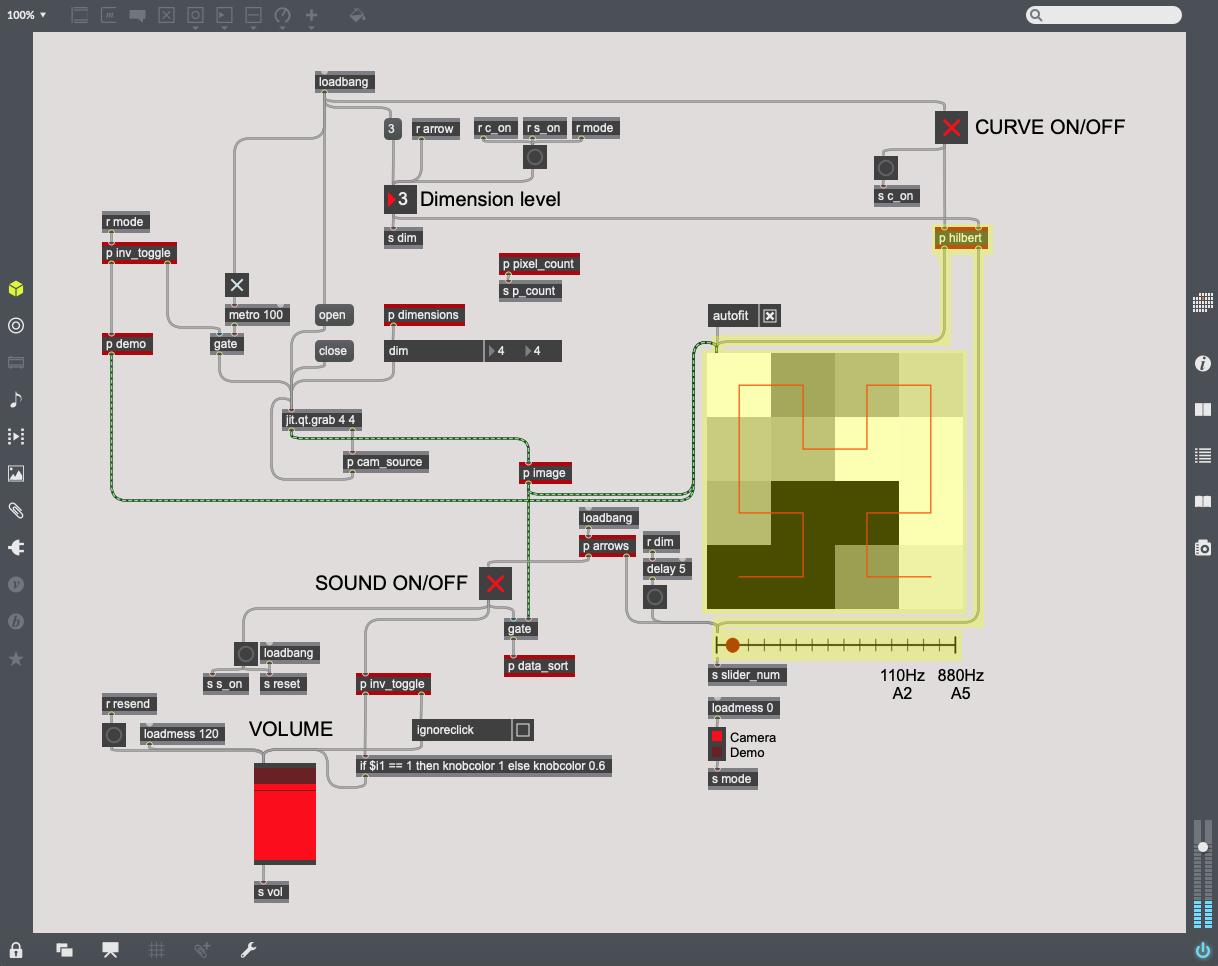

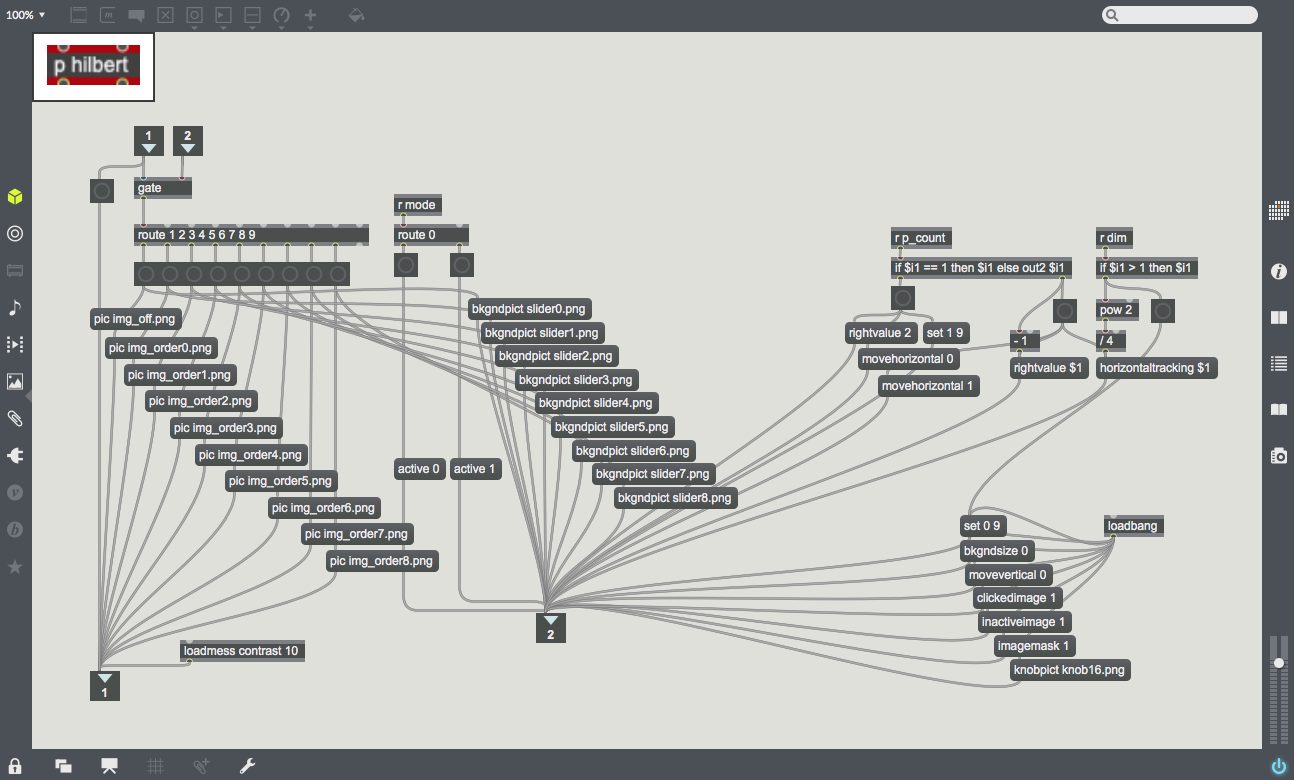

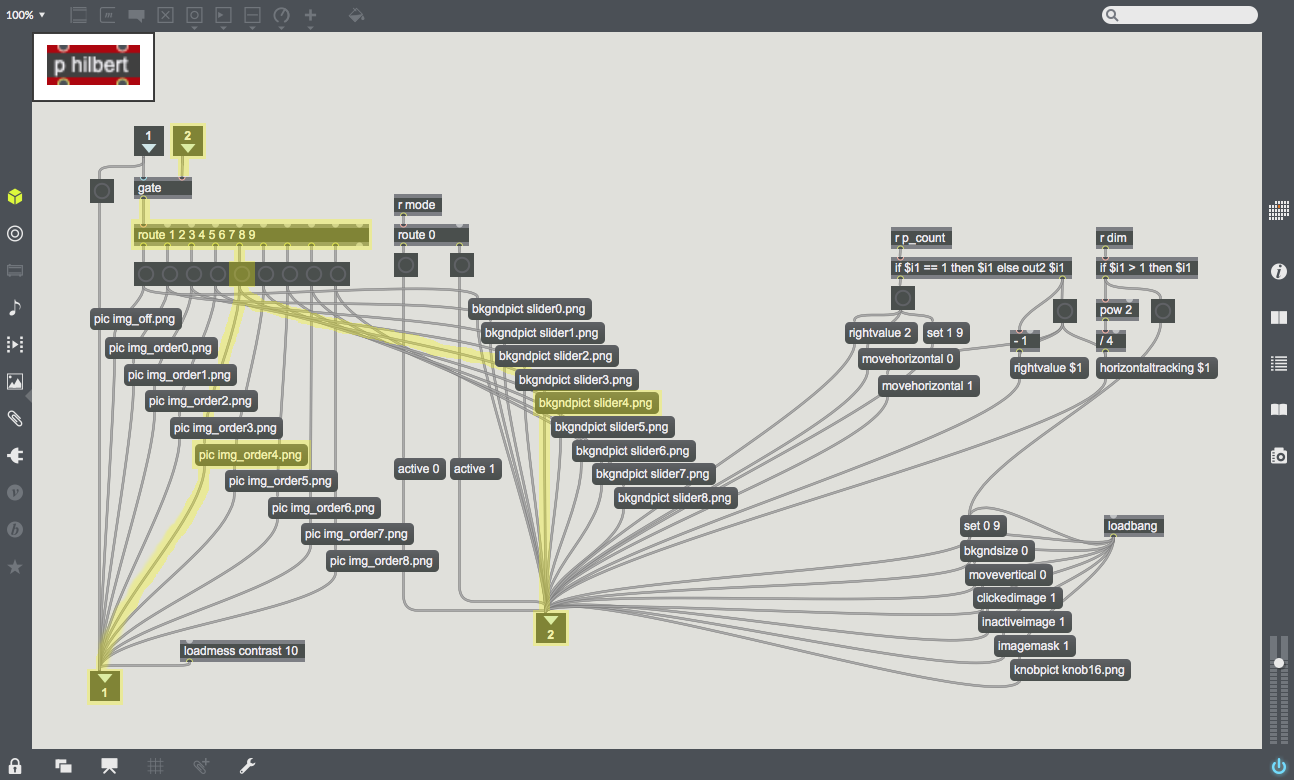

| + | | [[File:Hilbert.png|350px|thumb|This path controls the Hilbert Curves and the sliders for each dimension level. When the dimension level changes, the subpatcher ''p hilbert'' sends the proper images of the Hilbert Curve (''pwindow'') and the slider (''pictslider'').]] | ||

| + | |} | ||
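To see what each dimension level implies, it helps to count cells. The sketch below assumes, hypothetically, that dimension level ''k'' is the order of the Hilbert Curve, so the image is reduced to a 2^k by 2^k grid; the page does not state the actual grid sizes, and ''levelInfo'' is an illustrative name only.

```javascript
// Hypothetical helper: assumes the patch's "dimension level" k is the Hilbert
// curve order, i.e. the image is resampled to a 2^k x 2^k grid.
function levelInfo(k) {
  var side = Math.pow(2, k);   // cells per side of the square grid
  var cells = side * side;     // cells the curve visits = distinct frequencies
  return {
    side: side,
    cells: cells,
    sliderMax: cells - 1,      // last position along the curve (0-based)
    voices: cells              // one poly~ voice per pixel in camera mode
  };
}

for (var k = 1; k <= 5; k++) {
  var info = levelInfo(k);
  console.log("level " + k + ": " + info.side + "x" + info.side +
              " grid, " + info.cells + " positions");
}
```

Each level up quadruples the number of positions along the curve, and in camera mode the number of ''poly~'' voices grows with it; that is the computational price of the finer audio-sight.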

| + | {|style="margin: 0 auto;" | ||

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

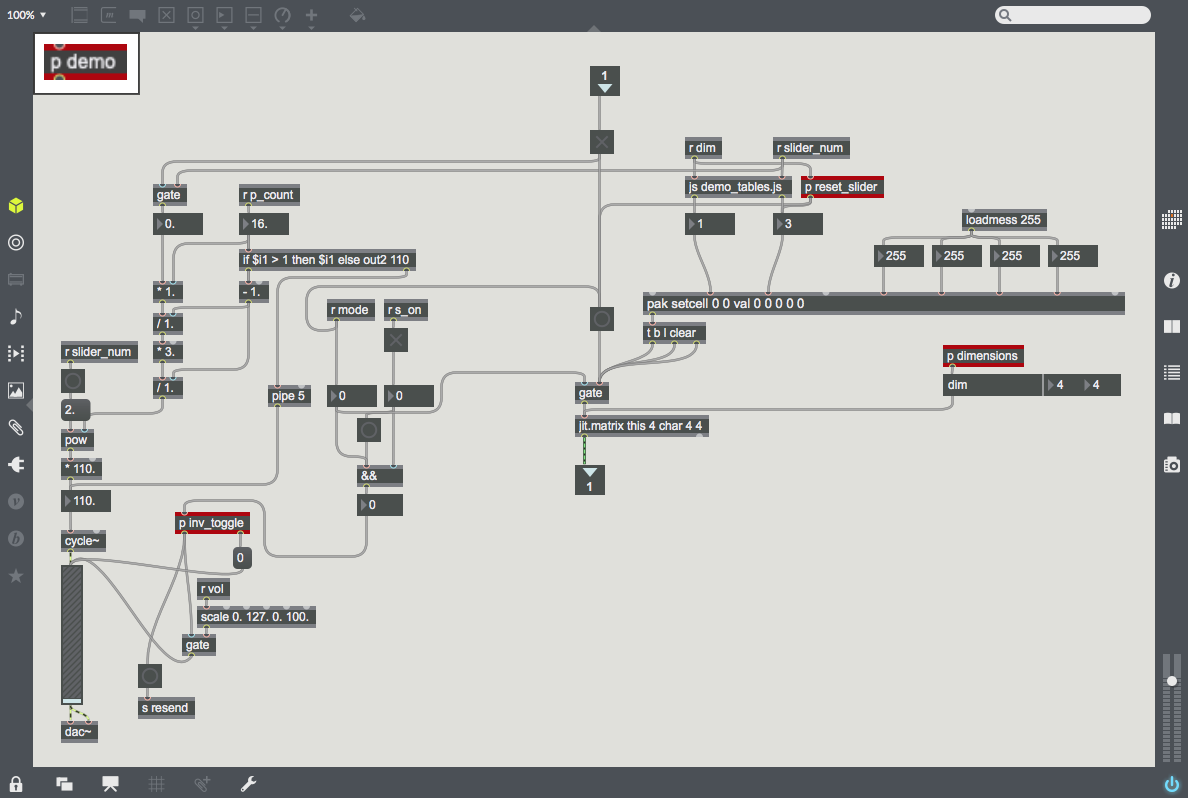

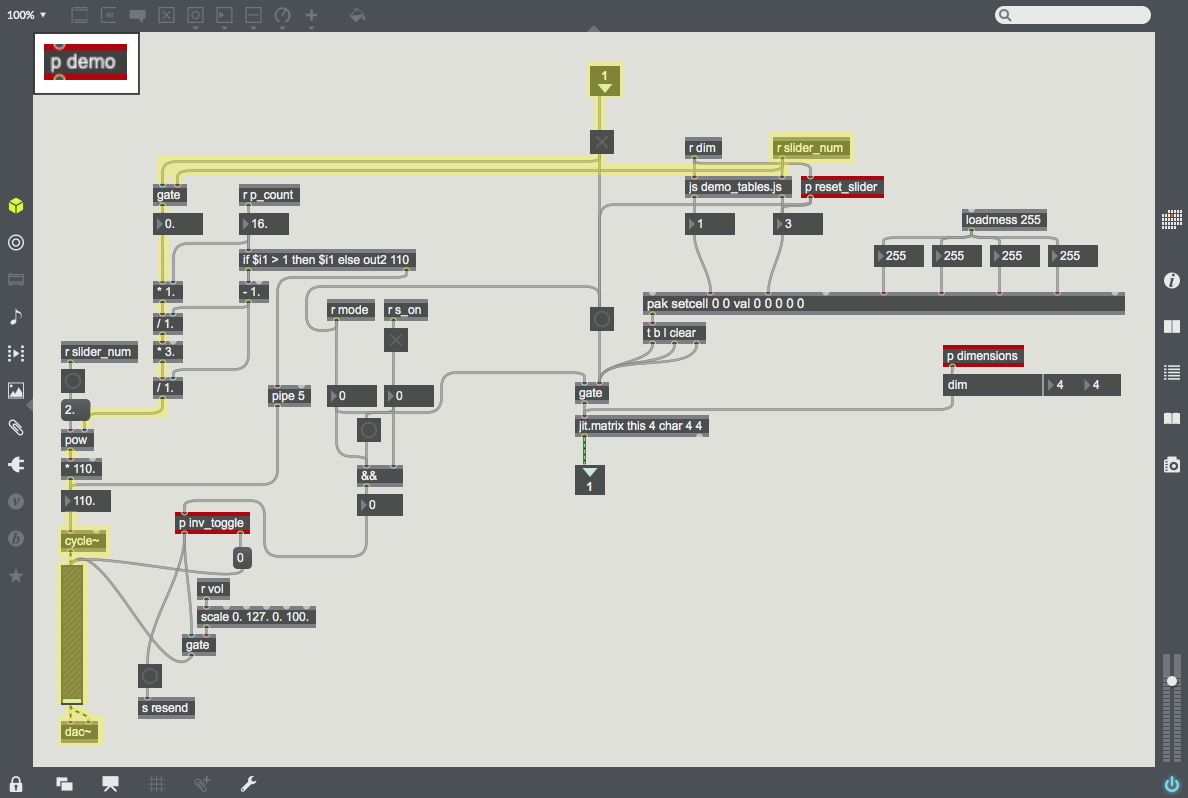

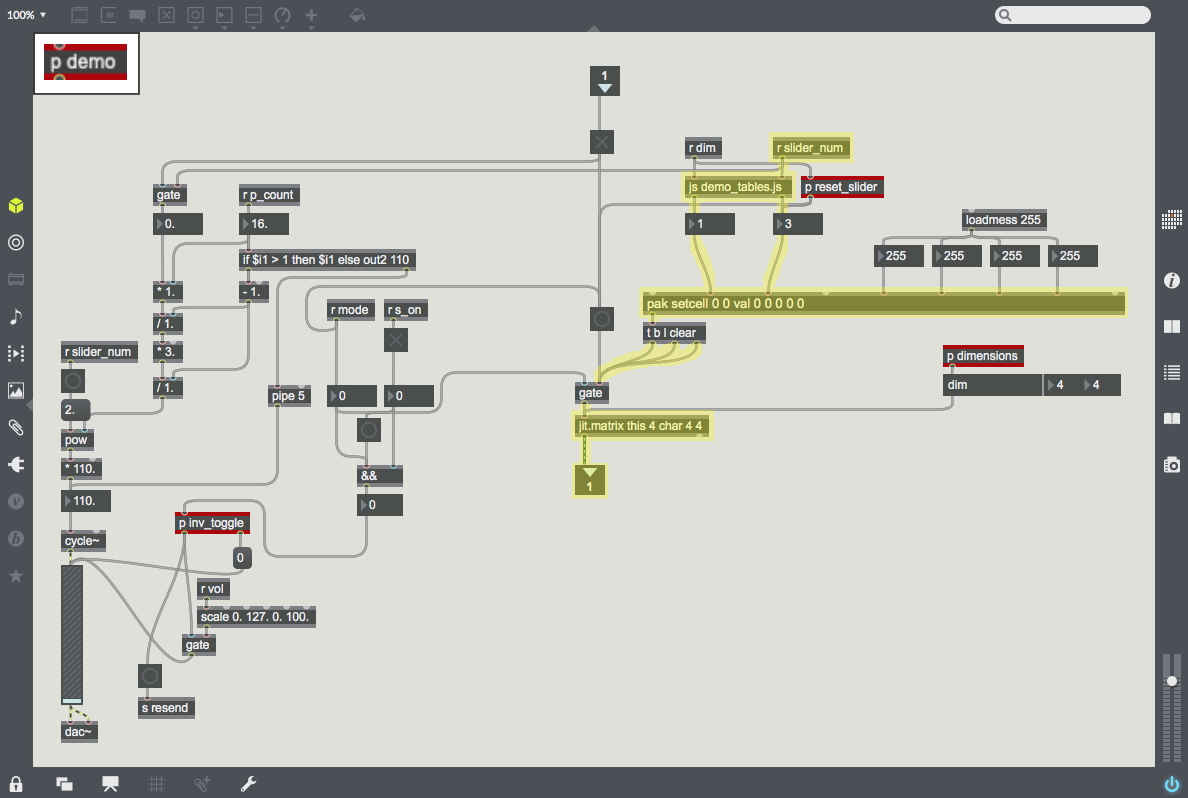

| + | | [[File:Demo.png|300px|thumb|This subpatcher ''p demo'' runs the demo mode.]] | ||

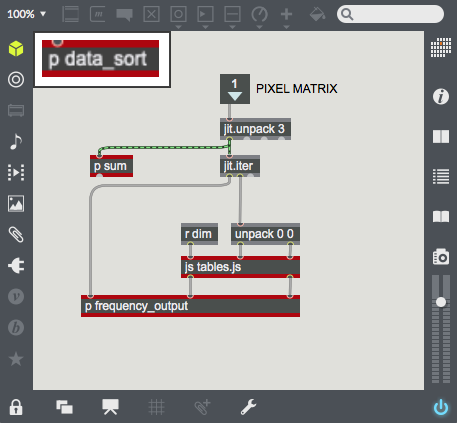

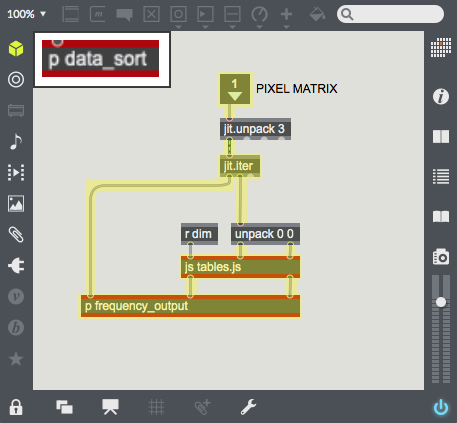

| + | | [[File:Data_sort.png|100px|thumb|This subpatcher ''p data_sort'' runs the camera mode.]] | ||

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

| + | | [[File:Hilbert_setup.png|300px|thumb|This subpatcher ''p hilbert'' sends the proper Hilbert Curves and sliders from image files.]] | ||

| + | | [[File:img_spacer.png|100px|frameless|]] | ||

| + | |} | ||

{|style="margin: 0 auto;"
| [[File:Demo_audio.png|200px|thumb|The left half of ''p demo'' uses the position along the Hilbert Curve (the slider value) to calculate that point's frequency. The frequency is then sent through ''cycle~'' and ''gain~'', and finally output through ''dac~''.]]
| [[File:Hilbert_to_Point.png|200px|thumb|The right half of ''p demo'' uses JavaScript to take the position along the Hilbert Curve (the slider value) and output the xy-coordinates of the corresponding pixel. It then uses ''pak'' and ''jit.matrix'' to generate a matrix of black pixels with a single white pixel at that point (x, y).]]
| [[File:Point_to_Hilbert.png|100px|thumb|''jit.iter'' splits the camera data into pixel coordinates and their brightness values. The JavaScript file takes each pixel's xy-coordinates and outputs that pixel's position on the Hilbert Curve (from first to last, when unraveled). These values are sent into the subpatcher below.]]
| [[File:img_spacer.png|50px|frameless|]]
| [[File:Image_selection.png|300px|thumb|The dimension level is the input; it is routed to the corresponding Hilbert Curve (''pic'') and slider (''bkgndpict''). On the far right are the parameters for the slider, which is an instance of the object ''pictslider''.]]
| [[File:img_spacer.png|50px|frameless|]]
|}
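The camera path (''jit.iter'' feeding the JavaScript file) can be approximated outside Max with the sketch below. It uses the standard inverse conversion from a cell to its position along the curve, which may differ in orientation from the project's own javascript. It assumes the frame has already been reduced to a square grid whose side is a power of two, stored as ''frame[y][x]'' with row 0 first (whether the curve then starts at the top or bottom of the image depends on that convention); ''rot'', ''xy2d'' and ''frameToCurve'' are hypothetical names.

```javascript
// Rotate/flip a quadrant so that neighboring sub-curves join end to end.
function rot(n, x, y, rx, ry) {
  if (ry === 0) {
    if (rx === 1) {
      x = n - 1 - x;
      y = n - 1 - y;
    }
    return [y, x]; // swap x and y
  }
  return [x, y];
}

// Cell (x, y) -> position along the curve, 0 .. n*n - 1 (n a power of two).
function xy2d(n, x, y) {
  var d = 0;
  for (var s = n >> 1; s > 0; s >>= 1) {
    var rx = (x & s) > 0 ? 1 : 0;
    var ry = (y & s) > 0 ? 1 : 0;
    d += s * s * ((3 * rx) ^ ry);
    var p = rot(n, x, y, rx, ry);
    x = p[0];
    y = p[1];
  }
  return d;
}

// Rough analogue of jit.iter plus the javascript file: visit every cell of a
// square brightness frame and tag it with its position along the curve.
function frameToCurve(frame) {
  var n = frame.length;
  var cells = [];
  for (var y = 0; y < n; y++) {
    for (var x = 0; x < n; x++) {
      cells.push({ index: xy2d(n, x, y), brightness: frame[y][x] });
    }
  }
  // Order the cells from first to last along the unraveled curve.
  cells.sort(function (a, b) { return a.index - b.index; });
  return cells;
}

var unraveled = frameToCurve([[10, 20], [30, 40]]);
console.log(unraveled.map(function (c) { return c.brightness; }));
```

For the 2 by 2 example frame, the brightness values come out in the order 10, 30, 40, 20, because the curve visits (0, 0), then (0, 1), (1, 1) and finally (1, 0).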

| + | {|style="margin: 0 auto;" | ||

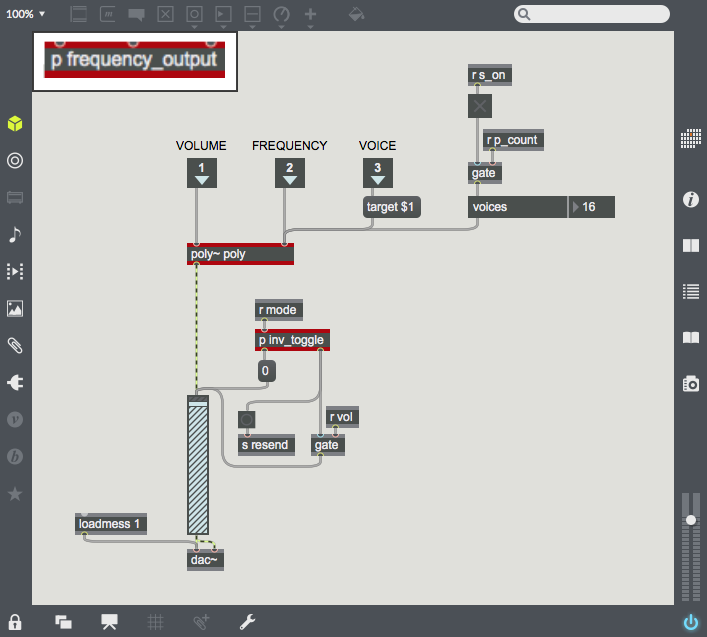

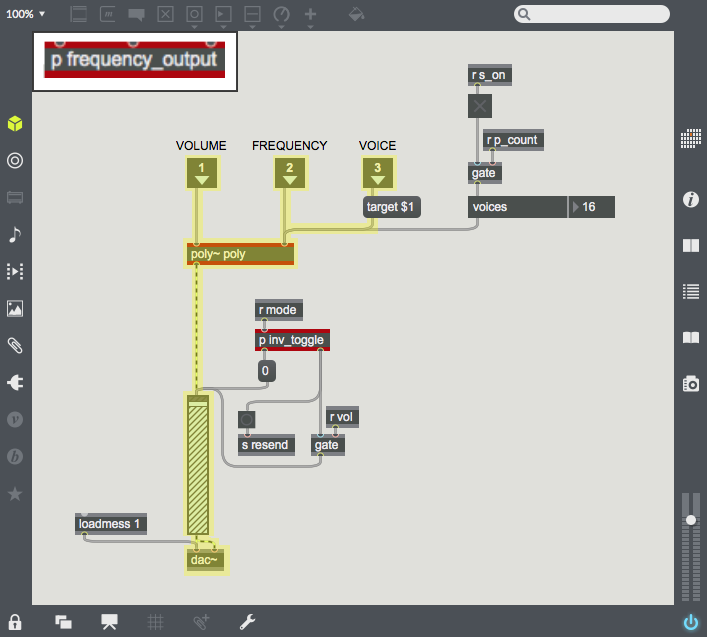

| + | | [[File:This_poly.png|300px|thumb|This subpatcher ''p frequency_output'' outputs the frequencies in camera mode.]] | ||

| + | |} | ||

{|style="margin: 0 auto;"
| [[File:Camera_audio.png|300px|thumb|Each incoming pixel's location on the Hilbert Curve and its brightness are sent into ''poly~'' with a unique voice. This way, every pixel outputs its frequency from its own clone of the ''poly~'' patcher. The results are sent back into a ''gain~'' control and finally output through ''dac~''.]]
|}
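Numerically, what the ''poly~'' voices and the ''gain~'' control produce together is a sum of sinusoids, one per pixel, scaled by a master gain. The sketch below renders that sum into a buffer; ''renderVoices'' and the example voices are hypothetical, and it ignores details of the real Max objects such as each voice's own phase.

```javascript
// Sum one sinusoid per voice (one voice per pixel), then apply a master gain,
// roughly what the poly~ clones and gain~ feed to dac~.
function renderVoices(voices, sampleRate, numSamples, masterGain) {
  var out = new Array(numSamples);
  for (var i = 0; i < numSamples; i++) {
    var t = i / sampleRate;   // time of this sample in seconds
    var sum = 0;
    for (var v = 0; v < voices.length; v++) {
      sum += voices[v].amp * Math.cos(2 * Math.PI * voices[v].freq * t);
    }
    out[i] = masterGain * sum;
  }
  return out;
}

// Two hypothetical pixels; scaling by 1 / voices.length keeps the mix within [-1, 1].
var voices = [{ freq: 220, amp: 1 }, { freq: 440, amp: 0.5 }];
var buffer = renderVoices(voices, 44100, 64, 1 / voices.length);
console.log(buffer.slice(0, 4));
```

Dividing by the number of voices is one simple way to avoid clipping when many bright pixels sound at once; in the patch, the ''gain~'' slider plays a similar role by hand.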

| + | {|style="margin: 0 auto;" | ||

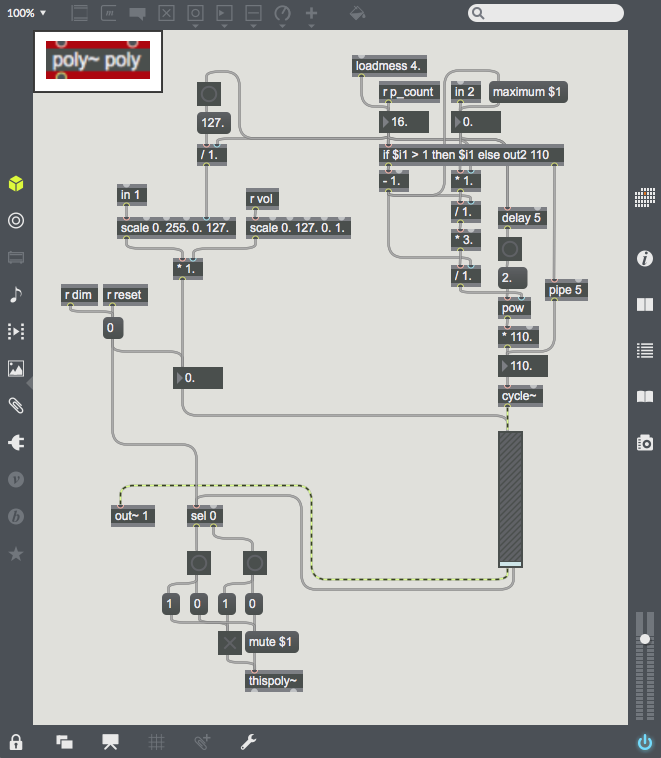

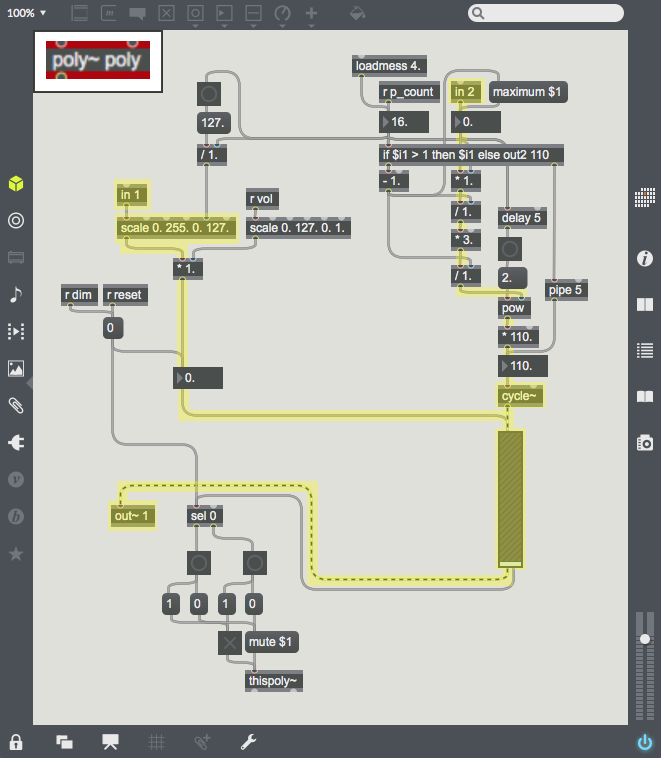

| + | | [[File:Poly.png|300px|thumb|This is the ''poly'' patcher. It calculates the volumes and frequencies from the camera data.]] | ||

| + | |} | ||

{|style="margin: 0 auto;"
| [[File:Volumes_and_Frequencies.png|300px|thumb|On the left, the pixel's brightness value is used to calculate the volume. On the right, the pixel's location on the Hilbert Curve is used to calculate its frequency. The two are combined with ''cycle~'' and ''gain~'', and then sent back to ''p frequency_output'' through ''out~''.]]
|}
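The page does not give the exact formulas used inside the ''poly~'' patcher, so the following is only one plausible choice: brightness is scaled linearly to an amplitude, and the position along the curve is mapped exponentially onto a frequency range. The name ''pixelToVoice'', the range bounds ''fMin'' and ''fMax'', and the 8-bit (0 to 255) brightness range are all assumptions.

```javascript
// Hypothetical per-voice parameters for one pixel.
// brightness: 0..255 (assumed 8-bit char data) -> linear amplitude 0..1.
// index: position along the curve, 0 .. numPositions - 1 -> frequency spaced
// exponentially between fMin and fMax, so every step is the same pitch interval.
function pixelToVoice(index, brightness, numPositions, fMin, fMax) {
  var amp = brightness / 255;
  var frac = numPositions > 1 ? index / (numPositions - 1) : 0;
  var freq = fMin * Math.pow(fMax / fMin, frac);
  return { freq: freq, amp: amp };
}

// A 4x4 grid (16 positions) spread over four octaves, 110 Hz to 1760 Hz.
var first = pixelToVoice(0, 255, 16, 110, 1760);  // brightest pixel, first position
var last = pixelToVoice(15, 64, 16, 110, 1760);   // dim pixel, last position
console.log(first, last);
```

An exponential mapping makes each step along the curve the same musical interval; a linear mapping in hertz would instead squeeze the later positions into ever smaller pitch steps.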

To access the full project, download the .zip file below. It contains the original file ''Ben_Gobler_Final_Project.maxpat'', along with the referenced files (images, JavaScript, texts).

[[File:Ben_Gobler_Final_Project_Folder.zip]]

==== Ben Gobler<br />October 2019 ====

Latest revision as of 15:24, 10 October 2019
